AI in Cybersecurity: Benefits, Risks, and Future Strategies for IT Leaders

Discover how AI and machine learning are transforming cybersecurity. Learn about use cases, risks, governance, and strategies to strengthen security, improve efficiency, and stay ahead of evolving threats.

Artificial intelligence (AI) is reshaping cybersecurity at unprecedented speed. According to a study by Arctic Wolf, 73% of organizations worldwide have integrated AI into their security operations, primarily to automate threat detection, response, and prevention.

As cyber threats become more sophisticated, defenders are increasingly relying on AI to outpace adversaries. From real-time log analysis to predictive threat modeling, AI enables faster, more accurate responses than legacy systems ever could.

This article gives IT and security leaders a roadmap for using AI in cybersecurity. We’ll explore:

- Core use cases across detection, investigation, and incident response

- The inherent risks and governance challenges tied to AI adoption

- Practical strategies to integrate AI responsibly within security operations

- Future-ready frameworks for AI-driven defense and resilience

Let’s dive in and understand how, and why, AI is becoming indispensable to modern cybersecurity.

The Role of AI in Cybersecurity Evolution

Artificial intelligence has moved from being a “nice-to-have” experiment in security operations to becoming a foundational element of modern cybersecurity. As cyberattacks grow more complex and frequent, AI provides IT and security leaders with the ability to detect, predict, and respond to threats faster than traditional methods ever could. Rather than relying solely on manual analysis or signature-based tools, AI introduces speed, adaptability, and intelligence at every layer of defense.

How AI Transforms Cybersecurity

At its core, AI enables three critical advancements in security: detection, prediction, and automated response. Machine learning models trained on massive volumes of threat data can spot anomalies that human analysts might miss, such as subtle variations in network traffic or unusual login behaviors. This makes AI a powerful tool for early-stage threat detection: catching attacks before they escalate into breaches.

Prediction is another key strength. By analyzing historical patterns and real-time telemetry, AI systems can anticipate vulnerabilities that are most likely to be exploited and prioritize remediation. This proactive stance shifts security from a reactive model to one that actively reduces risk exposure.

Finally, automated response capabilities allow organizations to act at machine speed. AI-powered systems can isolate compromised accounts, shut down malicious processes, or revoke suspicious privileges without waiting for human approval.

Beyond high-level strategy, AI also excels at streamlining day-to-day security tasks. Log analysis, for instance, is a resource-intensive process that can overwhelm teams. AI tools can parse millions of log entries in seconds, flagging only those events that require human intervention.
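
As a rough illustration of the log-triage idea, the sketch below counts failed logins per source IP and surfaces only the sources that cross a threshold. The log format, the `FAILED_LOGIN` event name, and the threshold of 3 are assumptions made for this example, not the format of any particular product; a real AI-based tool would typically learn its baseline rather than use a fixed cutoff.

```python
import re
from collections import Counter

# Hypothetical log line format, assumed purely for illustration.
LINE_RE = re.compile(
    r"^(?P<ts>\S+) (?P<event>FAILED_LOGIN|LOGIN_OK) "
    r"user=(?P<user>\S+) ip=(?P<ip>\S+)$"
)


def flag_noisy_sources(lines, threshold=3):
    """Return {ip: failed_count} for IPs with at least `threshold` failures."""
    failures = Counter()
    for line in lines:
        match = LINE_RE.match(line.strip())
        if match and match.group("event") == "FAILED_LOGIN":
            failures[match.group("ip")] += 1
    return {ip: count for ip, count in failures.items() if count >= threshold}


SAMPLE_LOGS = [
    "2024-05-01T10:00:01Z FAILED_LOGIN user=alice ip=203.0.113.7",
    "2024-05-01T10:00:02Z FAILED_LOGIN user=bob ip=203.0.113.7",
    "2024-05-01T10:00:03Z FAILED_LOGIN user=carol ip=203.0.113.7",
    "2024-05-01T10:00:04Z LOGIN_OK user=dave ip=198.51.100.4",
    "2024-05-01T10:00:05Z FAILED_LOGIN user=dave ip=198.51.100.9",
]

flagged = flag_noisy_sources(SAMPLE_LOGS)
print(flagged)  # {'203.0.113.7': 3}
```

Only one of the five entries' sources reaches an analyst here; the single failed login from `198.51.100.9` stays below the threshold and is not escalated.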

Similarly, vulnerability scanning powered by AI provides more accurate, contextualized results, filtering out false positives and highlighting the most urgent threats. Threat detection benefits as well, with AI models continuously refining their accuracy as they ingest new data from the evolving threat landscape.

By embedding AI into cybersecurity workflows, teams can reduce human error, accelerate detection and response, and reallocate valuable analyst time toward strategic initiatives.

Understanding Machine Learning in Security

Machine learning (ML) is one of the most transformative technologies in modern cybersecurity. Instead of relying solely on static rules or human-driven analysis, ML allows security systems to learn from massive datasets, adapt to new threats, and continuously improve accuracy over time. For IT and security leaders, understanding how ML functions is critical for building resilient environments.

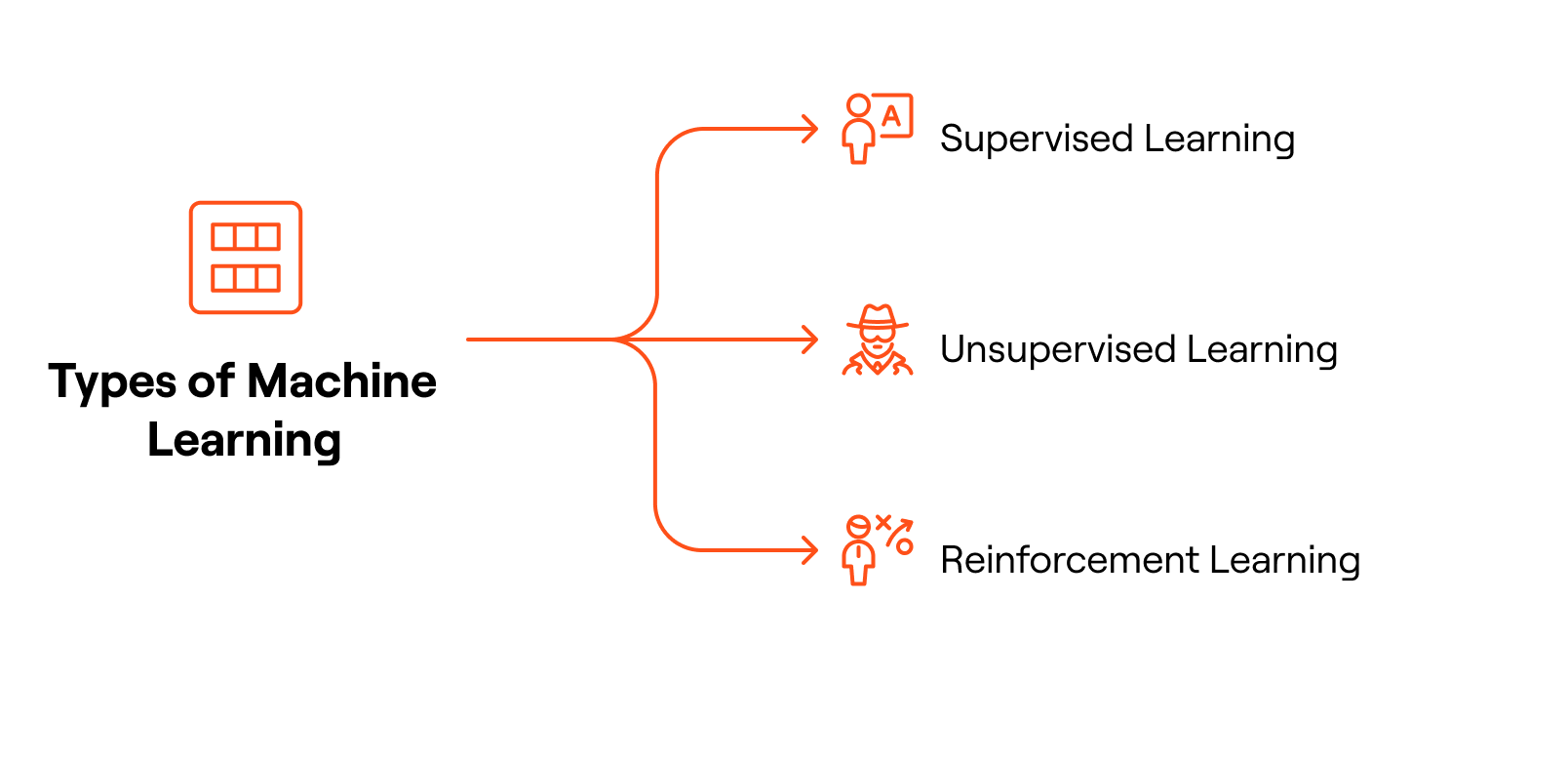

Types of Machine Learning

Machine learning in cybersecurity typically falls into three categories:

- Supervised Learning: Models are trained on labeled datasets where the outcome is already known, such as identifying malicious versus benign files. This approach is particularly effective in malware classification and phishing detection.

- Unsupervised Learning: Algorithms detect patterns and anomalies in unlabeled data, making it valuable for spotting unusual behaviors that may indicate insider threats or zero-day attacks.

- Reinforcement Learning: Systems learn through trial and error by receiving feedback from their actions. In security, this can be used to optimize adaptive defenses, like adjusting firewall policies or intrusion detection systems based on evolving attack patterns.

Together, these methods provide a diverse toolkit for tackling both known and unknown security challenges.
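
To make the unsupervised case concrete, here is a minimal sketch that flags outliers without any labels, using only the data's own baseline. It uses the modified z-score based on the median absolute deviation (MAD), a simple statistical stand-in for the richer models security products use. The login counts are invented, and the 3.5 cutoff is a commonly cited rule of thumb rather than a tuned value.

```python
from statistics import median


def mad_outliers(values, cutoff=3.5):
    """Return indices of values whose modified z-score exceeds `cutoff`."""
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        # More than half the values are identical, so the score is undefined.
        return []
    return [
        i for i, v in enumerate(values)
        if 0.6745 * abs(v - center) / mad > cutoff
    ]


# Hypothetical daily login counts for a single account.
daily_logins = [12, 14, 11, 13, 12, 15, 13, 96, 12, 14]

print(mad_outliers(daily_logins))  # [7]
```

The median-based baseline is used instead of the mean because a single extreme day would otherwise inflate the baseline and partly mask itself.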

Key Applications in Cybersecurity

Machine learning is reshaping several critical areas of security operations:

- Threat and Anomaly Detection: ML models can analyze real-time network traffic, endpoint activity, and log data to identify suspicious behavior faster than traditional monitoring methods.

- Malware and Vulnerability Analysis: By analyzing code structures and execution patterns, ML tools can classify malware variants and predict vulnerabilities before they are exploited.

- Behavioral and Forensic Use Cases: ML helps profile normal user behavior to flag deviations that may indicate account compromise. It also accelerates forensic investigations by automatically correlating large volumes of event data.

By applying ML across these domains, security teams gain faster insights, reduce false positives, and strengthen their ability to respond proactively to emerging threats.

AI-Driven Use Cases in Cybersecurity

Artificial intelligence has moved from being a buzzword to becoming a core enabler of modern cybersecurity strategies. By applying machine learning, natural language processing, and predictive analytics, AI can help security teams detect threats faster, automate repetitive tasks, and strengthen resilience against sophisticated cyberattacks.

For IT and security leaders, understanding where AI is already being deployed effectively is key to shaping future strategies.

Real-World Implementations

AI-driven security tools are increasingly applied in frontline use cases across IT and enterprise environments:

- Endpoint Protection and Network Traffic Analysis: AI enhances endpoint detection and response (EDR) by continuously analyzing behavioral patterns on devices. When abnormal activity – such as lateral movement or privilege escalation – is detected, AI models flag or automatically contain threats. Similarly, network traffic analysis powered by AI enables real-time anomaly detection, identifying malicious traffic hidden among legitimate flows.

- Email Security (Spam, Phishing, BEC): Traditional spam filters rely on static signatures, but modern AI email security systems leverage NLP and anomaly detection to stop phishing and business email compromise (BEC) attacks. By analyzing context, tone, and user interaction patterns, AI can catch highly targeted spear-phishing attempts that evade rule-based systems.

- AI-Powered SOC Playbooks and Attack Strategy Forecasting: Security operations centers (SOCs) benefit from AI-driven playbooks that automate alert triage, escalation, and response. Instead of analysts manually correlating logs, AI can dynamically recommend the best response paths. Beyond response, predictive AI models analyze past attack patterns to forecast future tactics, techniques, and procedures (TTPs), enabling security teams to harden defenses before adversaries strike.

Together, these implementations show how AI is not just augmenting existing tools, but fundamentally changing how threats are detected, managed, and preempted.

Next-Gen SIEM Transformation

Security Information and Event Management (SIEM) platforms are evolving rapidly with AI integration. Traditional SIEM systems, while valuable, often overwhelm teams with high volumes of alerts and require manual correlation of disparate log data.

AI-enhanced SIEMs address these pain points by:

- Event Correlation: Machine learning models link related alerts across endpoints, networks, and applications, helping analysts see broader attack campaigns rather than isolated events.

- Real-Time Detection: Instead of after-the-fact log reviews, AI-powered SIEMs process data streams continuously, flagging suspicious activity as it happens.

- Noise Reduction: By applying anomaly detection and risk scoring, AI filters out low-value alerts, allowing SOC teams to focus on high-priority incidents.

This transformation positions SIEM as a proactive, intelligence-driven platform rather than a reactive compliance tool. For leaders, adopting AI-enabled SIEM is a critical step toward building adaptive defenses that keep pace with evolving threats.
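
The noise-reduction step can be sketched as a scoring function followed by a filter. The example below is a simplified, assumption-heavy illustration: the `Alert` fields, the severity weights, and the `min_score` threshold are all hypothetical, and a real SIEM would derive them from data and analyst feedback.

```python
from dataclasses import dataclass

# Assumed weights for illustration only.
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 6}


@dataclass
class Alert:
    alert_id: str
    severity: str            # "low", "medium", or "high"
    asset_criticality: int   # 1 (lab machine) to 5 (critical server)
    anomaly_score: float     # 0.0 to 1.0, e.g. from an anomaly model


def risk_score(alert):
    """Combine severity, asset value, and model output into one number."""
    weight = SEVERITY_WEIGHT[alert.severity]
    return weight * alert.asset_criticality * alert.anomaly_score


def triage(alerts, min_score=5.0):
    """Drop low-value alerts and return the rest, highest risk first."""
    kept = [a for a in alerts if risk_score(a) >= min_score]
    return sorted(kept, key=risk_score, reverse=True)


alerts = [
    Alert("A1", "low", 2, 0.9),
    Alert("A2", "high", 5, 0.8),
    Alert("A3", "medium", 4, 0.5),
    Alert("A4", "medium", 1, 0.95),
]

print([a.alert_id for a in triage(alerts)])  # ['A2', 'A3']
```

Two of the four alerts are suppressed, and the remaining ones arrive already ordered by priority, which is the practical effect the list above describes.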

Risks and Limitations of AI in Cybersecurity

While AI has become a cornerstone of modern cybersecurity strategies, it is far from a silver bullet. For IT and security leaders, it’s critical to balance enthusiasm for AI’s defensive capabilities with awareness of the risks it introduces.

From adversaries weaponizing AI to operational challenges in managing models, organizations must understand where AI can both strengthen and weaken their security posture.

AI as a Double-Edged Sword

Artificial intelligence is not only a defensive asset but also a tool that attackers can exploit. On the defensive side, AI helps security teams detect anomalies, automate threat response, and anticipate attacks through predictive modeling.

However, in the offensive realm, adversaries are already leveraging AI to automate reconnaissance, craft convincing phishing emails, and bypass traditional detection systems. This dual use highlights the growing challenge: as defenders innovate, attackers innovate just as quickly.

Emerging AI Threats

Two fast-growing areas of concern for IT leaders are:

- AI-Driven Vulnerability Discovery: Attackers can use machine learning models to scan systems and identify weak points more effectively than manual testing. This enables them to weaponize exploits faster, compressing the time defenders have to patch vulnerabilities.

- Deepfake Phishing and Autonomous Attacks: Advances in generative AI allow threat actors to create highly convincing deepfake audio and video used in phishing or fraud campaigns. In parallel, autonomous attack bots are being developed to mimic human behavior, launch persistent attacks, and even adapt in real-time to defenses.

These threats raise the stakes for organizations, making it clear that defending against AI-enabled adversaries requires equally advanced defenses.

Operational Risks

Beyond external threats, AI itself introduces operational risks when embedded into cybersecurity workflows:

- ML Model Vulnerabilities: Machine learning systems can be poisoned with manipulated training data, leading to blind spots or deliberate misclassifications. Attackers may also craft “adversarial inputs” at detection time that cause a deployed AI system to misinterpret malicious activity as benign.

- Data Integrity Challenges: AI models rely on clean, representative data to be effective. If log data, telemetry, or identity signals are incomplete, outdated, or tampered with, the resulting models may produce false positives, false negatives, or biased outputs.

- Over-Reliance on Automation: Security teams risk becoming complacent if they treat AI outputs as unquestionable. Without human validation and context, automated decisions can disrupt operations or miss nuanced threats.

While AI enhances cybersecurity capabilities, it also creates new risks that demand vigilance. IT and security leaders must adopt AI responsibly: embedding it within layered defenses, ensuring robust model governance, and preparing for the reality that adversaries will weaponize the same technologies.

AI in Security Operations

Artificial intelligence is transforming security operations by turning static, reactive environments into dynamic, predictive systems. For IT and security leaders, AI’s ability to enhance Security Information and Event Management (SIEM) platforms and empower Security Operations Centers (SOCs) is proving to be a game-changer. From real-time anomaly detection to mentoring junior analysts, AI is redefining the SOC of the future.

Enhancing SIEM and SOC

Traditional SIEM systems often overwhelm analysts with an endless stream of alerts, many of which turn out to be false positives. AI helps solve this problem by prioritizing alerts based on context, risk, and likelihood of threat escalation. Machine learning models can analyze network traffic, endpoint telemetry, and identity data in real-time to spot unusual behaviors such as lateral movement or privilege escalation attempts.

Instead of alert fatigue, analysts receive smarter, high-fidelity notifications that point directly to probable threats.

AI can also correlate events across disparate systems, revealing attack campaigns that would otherwise be lost in siloed logs. For example, an unusual login from an unfamiliar location combined with anomalous file access could be automatically flagged as a potential insider threat, something traditional rules-based systems might miss.
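
The login-plus-file-access example can be sketched as a join across two event sources. This is a deliberately simple, rule-like illustration of the correlation step only: the event dictionaries, the `unfamiliar_location` and `anomalous` flags (assumed to come from upstream models), and the 30-minute window are all hypothetical.

```python
from datetime import datetime, timedelta


def correlate(logins, file_events, window=timedelta(minutes=30)):
    """Pair each suspicious login with anomalous file access by the same
    user that occurs within `window` after the login."""
    findings = []
    for login in logins:
        if not login["unfamiliar_location"]:
            continue
        for event in file_events:
            delay = event["time"] - login["time"]
            same_user = event["user"] == login["user"]
            if same_user and event["anomalous"] and timedelta(0) <= delay <= window:
                findings.append((login["user"], login["time"], event["path"]))
    return findings


logins = [
    {"user": "jdoe", "time": datetime(2024, 5, 1, 2, 10), "unfamiliar_location": True},
    {"user": "asmith", "time": datetime(2024, 5, 1, 9, 0), "unfamiliar_location": False},
]
file_events = [
    {"user": "jdoe", "time": datetime(2024, 5, 1, 2, 25),
     "path": "/finance/payroll.xlsx", "anomalous": True},
    {"user": "jdoe", "time": datetime(2024, 5, 1, 14, 0),
     "path": "/finance/q1.xlsx", "anomalous": True},
    {"user": "asmith", "time": datetime(2024, 5, 1, 9, 5),
     "path": "/eng/design.md", "anomalous": True},
]

findings = correlate(logins, file_events)
print(findings)
```

Only the `jdoe` login followed 15 minutes later by the payroll file access is reported; neither signal alone would have produced a finding.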

By integrating AI into SIEM and SOC workflows, security teams gain proactive monitoring capabilities, enabling faster containment and reducing Mean Time to Detection (MTTD) and Mean Time to Response (MTTR).

Generative AI in SOC

While machine learning enhances detection and alerting, generative AI is beginning to act as a virtual mentor inside SOCs. Instead of analysts manually combing through dashboards, generative AI can summarize alerts, explain the likely cause, and suggest step-by-step remediation actions.

For less experienced analysts, this serves as an on-demand training resource, helping them learn investigative techniques while resolving issues. For example, when a suspicious login attempt occurs, generative AI could provide historical context, highlight similar past incidents, and even generate a recommended playbook for response.

Moreover, generative AI can support SOC playbook creation and automation, drafting workflows that senior analysts can refine and deploy. This accelerates operational maturity and ensures consistent responses to recurring threats.

AI is reshaping security operations at every level: from refining the quality of SIEM alerts to serving as an interactive advisor for SOC analysts. As threats grow in speed and sophistication, AI-driven SOCs provide the scalability, adaptability, and intelligence needed to stay ahead.

Governance, Risk, and Compliance Considerations

As AI becomes a central pillar of cybersecurity strategy, IT and security leaders face not only technical challenges but also governance, risk, and compliance (GRC) concerns. AI systems bring enormous potential for detecting and mitigating threats at scale, but without proper oversight, they can introduce risks related to bias, accountability, and regulatory exposure.

Ethical and Regulatory Challenges

The use of AI-driven security tools raises unique ethical and oversight challenges. Unlike traditional security solutions, AI systems operate with a level of autonomy that can make their decisions difficult to explain or audit. For instance, when a machine learning model flags a potential insider threat, leaders need to understand why the system took that action: both to validate accuracy and to meet compliance requirements.

Governance frameworks must therefore include:

- Transparent decision-making: Ensuring explainability in AI models so that human analysts can trace security actions back to clear inputs.

- Bias and fairness checks: Preventing false positives or discriminatory impacts in security monitoring that could affect employees or external users unfairly.

- Defined accountability: Assigning clear roles for when human oversight is required, and ensuring there is no over-reliance on fully autonomous responses.

Without this governance layer, organizations risk exposing themselves to compliance failures, reputational damage, or ineffective security measures that miss nuanced threats.
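
One way these three governance requirements might translate into code is a decision record that captures what the model saw, why it scored the way it did, and who is accountable for the outcome. The sketch below is an assumption-laden illustration: the field names, the 0.95 threshold, the role names, and the `feature_contributions` input (assumed to come from some explainability method) are hypothetical, not taken from any specific product or standard.

```python
import json
from datetime import datetime, timezone

AUTO_ACTION_THRESHOLD = 0.95  # assumed policy value, not a recommendation


def decide(detection, threshold=AUTO_ACTION_THRESHOLD):
    """Turn a model detection into an auditable decision record."""
    ranked = sorted(
        detection["feature_contributions"].items(),
        key=lambda item: abs(item[1]),
        reverse=True,
    )
    automated = detection["confidence"] >= threshold
    return {
        "decided_at": datetime.now(timezone.utc).isoformat(),
        "subject": detection["subject"],
        "model_version": detection["model_version"],
        "confidence": detection["confidence"],
        # Transparency: the three inputs that influenced the score most.
        "top_factors": [name for name, _ in ranked[:3]],
        # Accountability: below the threshold, a human must decide.
        "action": "auto_contain" if automated else "queue_for_analyst",
        "accountable_party": (
            "automation-policy-owner" if automated else "soc-analyst-on-call"
        ),
    }


detection = {
    "subject": "user:jdoe",
    "model_version": "insider-threat-v3",
    "confidence": 0.82,
    "feature_contributions": {
        "off_hours_access": 0.41,
        "bulk_download": 0.35,
        "new_device": 0.12,
        "vpn_use": -0.03,
    },
}

record = decide(detection)
print(json.dumps(record, indent=2))
```

Because the record is plain JSON, it can be appended to whatever audit store the organization already uses.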

Regulatory Landscape

Governments and regulators worldwide are beginning to codify standards for AI in security and broader enterprise use cases. One of the most prominent examples is the EU AI Act, which introduces a risk-based classification system for AI tools and imposes strict requirements for high-risk applications, including transparency, oversight, and human-in-the-loop decision-making. For organizations operating internationally, this means AI-driven cybersecurity systems may soon need to meet these legally binding standards.

Other frameworks and standards also shape the compliance landscape:

- NIST AI Risk Management Framework (AI RMF): Provides guidelines for managing risks related to AI trustworthiness, fairness, and accountability.

- ISO/IEC 42001 (Artificial Intelligence Management System Standard): Helps organizations establish governance structures around AI deployments.

- Sector-specific regulations (such as HIPAA in healthcare or PCI DSS for payment card data): Organizations must be able to show that AI-enabled security controls still meet the privacy and data protection obligations these rules impose.

Together, these frameworks and regulations emphasize that AI security adoption must be paired with compliance readiness. Organizations cannot simply deploy AI and hope it works; they must document processes, justify outcomes, and provide auditable records of security decisions.

AI and Identity Security

Identity remains one of the most targeted attack surfaces in cybersecurity. According to numerous industry studies, the majority of breaches involve compromised credentials. As organizations increasingly adopt AI across security operations, identity security is emerging as a critical area where AI-driven tools can help reduce risks, streamline monitoring, and detect anomalies faster than traditional approaches.

MLSecOps for AI

Securing identity in the age of AI requires a machine learning security operations (MLSecOps) approach. Just as DevSecOps embeds security throughout the development lifecycle, MLSecOps integrates controls across the entire machine learning pipeline: from model training to inference and continuous monitoring.

- Training: During training, sensitive identity data (such as user access logs or entitlement records) may be used to teach models how to recognize suspicious behavior. MLSecOps practices ensure data is anonymized, access to datasets is restricted, and adversarial training is implemented to harden models against manipulation.

- Inference: When models are deployed to evaluate login attempts or privilege escalations, guardrails like explainability and drift detection help prevent misclassifications that could lock out legitimate users or miss insider threats.

- Monitoring: Continuous oversight of models ensures that evolving attack patterns – such as novel credential-stuffing techniques or deepfake-generated phishing attempts – are quickly identified. MLSecOps also ensures automated remediation workflows remain accurate and aligned with compliance obligations.

This lifecycle approach enables IT and security teams to deploy AI safely without introducing new risks into identity environments.
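
Drift detection, mentioned under inference above, can be approximated by comparing the distribution of model scores seen in production with the distribution seen at training time. The sketch below uses the Population Stability Index (PSI), one common choice among several. The sample scores are invented, and the 0.25 alert level is a widely quoted rule of thumb rather than a fixed standard.

```python
import math


def psi(expected, actual, bins=5, eps=1e-6):
    """Population Stability Index between two samples of scores in [0, 1]."""
    def proportions(sample):
        counts = [0] * bins
        for score in sample:
            index = min(int(score * bins), bins - 1)
            counts[index] += 1
        # Floor empty bins at `eps` so the logarithm below is defined.
        return [max(count / len(sample), eps) for count in counts]

    p_expected = proportions(expected)
    p_actual = proportions(actual)
    return sum(
        (a - e) * math.log(a / e) for e, a in zip(p_expected, p_actual)
    )


# Hypothetical risk scores from training data and from live traffic.
training_scores = [0.05, 0.1, 0.15, 0.22, 0.25, 0.3, 0.35, 0.5, 0.7, 0.9]
live_scores = [0.62, 0.65, 0.7, 0.75, 0.83, 0.85, 0.9, 0.95, 0.3, 0.1]

drift = psi(training_scores, live_scores)
if drift > 0.25:
    print(f"PSI={drift:.2f}: significant drift, review or retrain the model")
```

Note that the `eps` floor makes empty bins contribute heavily to the index, so with small samples like these the value should be read as a signal to investigate, not a precise measurement.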

AI in Identity Protection

Beyond securing AI systems themselves, AI is transforming how organizations defend identities from attackers. Modern threats often involve sophisticated tactics such as account takeover, privilege misuse, or lateral movement within hybrid environments. Traditional rule-based monitoring is too static to keep up.

AI-driven identity protection enhances defenses through:

- Advanced anomaly detection: ML models analyze vast amounts of behavioral data, spotting deviations such as unusual login times, impossible travel patterns, or unexpected access requests.

- Contextual risk scoring: AI systems evaluate multiple signals – device posture, geolocation, historical activity – to assign a dynamic risk score, enabling adaptive access policies.

- Proactive prevention: Automated responses, such as step-up authentication when anomalies are detected, help block suspicious activity before it escalates into a breach.

By integrating AI into identity governance and access management, organizations can achieve least-privilege enforcement at scale while reducing the burden on human analysts.
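
The three capabilities listed above can be combined in a small sketch: an impossible-travel check, a contextual risk score, and a step-up authentication decision. Everything specific here is an assumption for illustration: the point values, the 900 km/h speed limit, the working-hours range, and the decision thresholds are hypothetical, and timestamps are assumed to be in UTC.

```python
import math
from datetime import datetime


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two coordinates in kilometres."""
    radius_km = 6371.0
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * radius_km * math.asin(math.sqrt(a))


def is_impossible_travel(prev, curr, max_speed_kmh=900.0):
    """True if moving between the two logins would exceed `max_speed_kmh`."""
    hours = (curr["time"] - prev["time"]).total_seconds() / 3600
    distance = haversine_km(prev["lat"], prev["lon"], curr["lat"], curr["lon"])
    if hours <= 0:
        return distance > 0
    return distance / hours > max_speed_kmh


def access_decision(prev, curr, known_device, usual_hours=range(7, 20)):
    """Return (risk_score, action) for the current login attempt."""
    score = 0
    if is_impossible_travel(prev, curr):
        score += 50
    if not known_device:
        score += 30
    if curr["time"].hour not in usual_hours:
        score += 20
    if score >= 70:
        return score, "deny"
    if score >= 30:
        return score, "step_up_mfa"
    return score, "allow"


ny_login = {"time": datetime(2024, 5, 1, 9, 0), "lat": 40.71, "lon": -74.01}
london_login = {"time": datetime(2024, 5, 1, 10, 0), "lat": 51.51, "lon": -0.13}

print(access_decision(ny_login, london_login, known_device=True))
# (50, 'step_up_mfa')
```

A New York login followed one hour later by a London login triggers step-up authentication even on a known device; the same pair from an unknown device would be denied outright under these example thresholds.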

Organizational Readiness and Cyber Resilience

As cyber threats evolve in both volume and sophistication, IT leaders must prioritize not only prevention but also preparedness. AI is increasingly becoming a force multiplier, enabling organizations to strengthen both day-to-day defense and long-term resilience. By blending AI-driven support with robust recovery strategies, enterprises can scale their security operations while maintaining the agility needed to withstand and bounce back from attacks.

AI-Driven Analyst Support

One of the biggest challenges in modern security operations is the sheer volume of alerts and data. Human analysts can quickly become overwhelmed by false positives, fragmented signals across tools, and the need to correlate events in real time. AI addresses this bottleneck by augmenting human decision-making rather than replacing it.

- Triage at scale: Machine learning models can filter noise from real threats, classifying alerts based on severity, context, and historical patterns.

- Decision acceleration: AI-driven recommendations help analysts prioritize actions such as escalating an incident, initiating containment steps, or dismissing benign anomalies.

- Skill multiplier: For junior analysts, generative AI can serve as a virtual mentor, providing guidance on best practices and next steps. For experienced teams, it helps scale expertise to cover broader environments.

By pairing automation with human oversight, AI allows security teams to focus on high-impact tasks, reducing burnout while improving response speed and accuracy.

Cyber Resilience and Recovery

Even with strong defenses, incidents are inevitable, from ransomware and phishing campaigns to insider threats and cloud misconfigurations. Cyber resilience is about maintaining vigilance while ensuring the ability to recover quickly. AI contributes to this resilience in several ways:

- Continuous monitoring: Predictive analytics spot deviations early, such as unusual network traffic or privilege escalations, allowing teams to take proactive measures.

- Automated containment: AI-driven workflows can isolate compromised devices, revoke risky credentials, or block malicious IPs in real time, reducing blast radius.

- Faster recovery: Machine learning models analyze prior incidents to recommend recovery playbooks and simulate outcomes, helping teams refine response strategies.

- Adaptive learning: Post-incident reviews feed back into AI models, ensuring that defenses improve with every attack faced.

This approach transforms cybersecurity from a reactive function into a resilient, adaptive system that balances prevention with rapid response and recovery.

Harnessing AI for a Smarter, Safer Identity Strategy

AI is essential in modern cybersecurity. From anticipating threats with predictive analytics to automating response workflows, AI allows IT and security teams to move faster, smarter, and with greater precision. But this innovation also introduces new threats: adversarial AI, synthetic identities, and increasingly complex identity-based attacks. To stay secure, organizations must adopt AI not just as a tool but as a core pillar of their identity governance strategy.

Lumos leads the way by embedding AI at the foundation of identity governance. Our Autonomous Identity Platform goes beyond detection: it orchestrates identity workflows, enforces least-privilege access, and monitors human and non-human identities across your SaaS, cloud, and on-prem stack. With Lumos’ AI identity agent Albus, organizations can uncover hidden access risks, automate policy enforcement, and gain contextual, real-time insights that drive faster, more informed decisions.

While traditional identity platforms struggle with scale and complexity, Lumos makes it easy to govern access intelligently, with no-code workflows, Slack-native approvals, and AI-backed automation that reduces manual overhead and audit fatigue.

Ready to put AI at the center of your security posture? Book a demo with Lumos and discover how AI-powered identity governance can help you secure access, reduce risk, and scale operations with confidence.

