Agentic AI and Identity Governance: What You Need to Know

Discover how to build secure, scalable identity governance for agentic AI. This guide explores modern identity frameworks, dynamic credential management, and zero-trust strategies to help IT and security leaders manage AI-driven environments effectively.

Autonomous AI agents are no longer futuristic. In fact, according to Dimensional Research, over 80% of companies are already deploying intelligent AI agents – such as chatbots, automated workflows, or decision-making systems – across their operations, with adoption expected to skyrocket in the coming years.

As we navigate this era, Agentic AI presents new identity governance challenges. Traditional IAM models built for human users or static machine identities fall short when applied to these dynamic, context-aware agents.

This guide arms IT and security leaders with a forward-looking identity framework tailored to Agentic AI. We’ll explore why Agentic AI demands new governance strategies, expose the limitations of legacy protocols, and outline the principles of a secure, scalable identity architecture for agentic environments.

What Is Agentic AI in Identity?

Agentic AI in identity refers to autonomous, goal-oriented AI systems that can initiate actions, make decisions, and respond to changing conditions without requiring continuous human input. Unlike traditional software automation, agentic AI possesses the ability to plan, reason, and adapt based on context. In the identity and access management (IAM) world, this means AI agents can request access, provision accounts, rotate credentials, detect anomalies, or orchestrate workflows on behalf of users, systems, or other agents.

As organizations adopt increasingly dynamic, distributed environments, agentic AI introduces both transformative opportunities and significant governance challenges.

Reactive Agents

Reactive agents operate solely in response to environmental stimuli. They do not plan or maintain long-term memory; instead, they evaluate conditions and perform predefined actions. In identity use cases, a reactive agent might automatically revoke access when a security threshold is breached or provision entitlements when an onboarding trigger fires. These agents are fast and reliable but limited in complexity.
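As a rough illustration, a reactive agent can be sketched as a stateless function that maps each event to a predefined action. The event kinds, the `RISK_THRESHOLD` value, and the action strings below are hypothetical names chosen for this sketch, not part of any specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Event:
    kind: str           # e.g. "risk_score" or "onboarding" (hypothetical event kinds)
    subject: str        # the user or identity the event concerns
    value: float = 0.0  # numeric payload, used here for risk scores


RISK_THRESHOLD = 0.8    # assumed cut-off for this sketch


def react(event: Event) -> str:
    """Map one event to one predefined action: no planning, no memory."""
    if event.kind == "risk_score" and event.value >= RISK_THRESHOLD:
        return f"revoke_access:{event.subject}"
    if event.kind == "onboarding":
        return f"provision_entitlements:{event.subject}"
    return "no_action"
```

Because the function keeps no state between calls, its behavior is easy to test and audit, which is also why it cannot handle multi-step goals.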

Deliberative Agents

Deliberative agents incorporate reasoning, planning, and goal-oriented decision-making. They evaluate available information, consider alternative actions, and execute strategies aligned with defined objectives. In identity governance, a deliberative agent might assess risk signals, evaluate policies, and determine the minimal access set needed for a user to complete a task, acting with far more autonomy than traditional rule engines.

Hybrid Agents

Hybrid agents combine reactive adaptability with deliberative planning. They can respond instantly to events while also optimizing decisions based on broader context. This makes them particularly suited for identity environments where real-time security actions (reactive) must coexist with long-term governance strategies (deliberative). For example, a hybrid agent could grant temporary just-in-time (JIT) access while also generating a future recommendation to refine role definitions.

Multi-Agent Systems

Multi-agent systems involve multiple AI agents interacting, collaborating, or even negotiating with one another to achieve complex goals. Within identity, these systems could coordinate across provisioning, risk scoring, anomaly detection, and policy orchestration. The collective intelligence of multiple agents allows for automated end-to-end governance workflows – something traditional IAM tools cannot achieve.

Characteristics of Agentic Identity

Agentic identity is defined by autonomy, context-awareness, delegated authority, and continuous adaptation. Agentic AI systems maintain their own identity credentials, request permissions, justify actions, and communicate with other agents. They make decisions based on both static factors (roles, attributes) and dynamic ones (risk, context, behavior). This marks a shift from “identity as an attribute of a user” to “identity as a capability of autonomous systems.”

The Limitations of Traditional Identity Frameworks

As organizations adopt Agentic AI, the cracks in traditional identity frameworks like OAuth, SAML, and static IAM models are becoming increasingly visible. These standards were designed for human users and predictable applications, not self-directed AI entities with evolving contexts and dynamic access needs. Understanding where these frameworks fall short is critical for IT and security leaders planning next-generation identity governance.

Challenges with Legacy Standards

Protocols like OAuth and SAML are effective for conventional web and enterprise applications, but they struggle with the dynamic demands of AI agents.

- OAuth Limitations: OAuth was built for delegated access between human users and services, often relying on long-lived tokens or refresh tokens. For autonomous AI agents that spin up or down frequently, these static models create risk and unnecessary exposure.

- SAML Limitations: SAML’s XML-heavy approach is optimized for browser-based, human-driven sessions, not for ephemeral, machine-to-machine interactions where agents need just-in-time access.

- Static Sessions vs. Dynamic Lifecycles: Legacy protocols assume a stable session lifecycle, whereas AI agents may initiate thousands of micro-interactions across services in real time. Static session models are too rigid, leading to either overprovisioning (too much access for too long) or operational bottlenecks.

Security Gaps in Static Architectures

Beyond technical inflexibility, traditional identity frameworks leave security blind spots when applied to AI agents.

- Inadequate Trust Assumptions: Legacy IAM assumes predictable, human-driven activity. AI agents, however, operate autonomously, adapt to conditions, and can evolve access needs mid-session. Without continuous verification, this creates avenues for privilege misuse or drift.

- Lack of Continuous Validation: Traditional frameworks often rely on point-in-time authentication at login. For autonomous AI, that’s insufficient. Continuous, context-aware validation is required to ensure an agent is still authorized, behaving within defined policies, and not compromised.

Legacy standards like OAuth and SAML remain foundational for human-centric identity management, but they’re misaligned with the ephemeral, autonomous, and context-driven nature of AI agents. Moving forward, identity governance must evolve from static, one-time models toward continuous, adaptive, and policy-based approaches that can keep pace with Agentic AI.

What Makes Agentic AI and AI Agents Unique

Agentic AI and autonomous agents are fundamentally different from traditional software or even rule-based automation. For IT and security leaders, this distinction is critical: it reshapes how identity, governance, and compliance must be approached. Unlike human users or static applications, these agents adapt in real time, act independently, and interact across ecosystems in ways that legacy identity frameworks were never designed to handle.

Dynamic and Ephemeral Identities

Traditional identity models assume long-lived users, roles, and sessions. Agentic AI breaks that assumption.

- Dynamic identities: AI agents may generate new identifiers or context-specific credentials on demand, depending on their assigned tasks.

- Ephemeral lifecycles: Some agents only exist for minutes or even seconds—spinning up to complete a micro-task, then dissolving.

This fluidity makes it impossible to rely on static entitlements or one-time provisioning. Instead, governance must adapt toward just-in-time identity assignment and continuous validation.

Autonomous Decision-Making

One of the defining features of agentic AI is its ability to act without human initiation.

- Agents analyze data, evaluate conditions, and make decisions independently.

- They may grant themselves access to systems, initiate workflows, or trigger downstream automations.

This autonomy amplifies both efficiency and risk: while it reduces reliance on human oversight, it also requires strong policy-based guardrails to prevent privilege escalation, unauthorized data movement, or unintended business impacts.

Blurring the Line Between Human and Machine Activity

As AI agents collaborate alongside human users, the distinction between who, or what, is performing an action becomes increasingly complex.

- Audit trails may need to capture both the AI’s reasoning and the final action, not just a simple access log.

- Security teams face the challenge of attributing responsibility across hybrid environments where agents and humans share tasks.

This “identity blur” heightens the need for context-rich monitoring and granular auditability, ensuring accountability while preserving operational agility.

Non-Human Identities (NHIs) and the Agentic Identity Lifecycle

As organizations adopt more automation and AI-driven systems, non-human identities (NHIs) now outnumber human identities in most enterprise environments. These NHIs include service accounts, APIs, workloads, machine agents, bots, and now autonomous AI agents, each requiring unique authentication, authorization, and lifecycle management controls. In the context of agentic AI, NHIs evolve beyond static system accounts and become dynamic actors capable of initiating actions, requesting access, and interacting with other systems without direct human oversight. This shift amplifies both the value and risk associated with machine identity governance.

The Expanding Role of Non-Human Identities

Traditional NHIs were predictable and largely configuration-based. Their permissions changed infrequently, and they generally performed narrow, predefined tasks. Agentic AI disrupts this model by introducing autonomous machine actors that can learn, adapt, and take initiative. These agents often:

- Interact with multiple applications and APIs

- Generate or manage other NHIs

- Request or escalate privileges as needed

- Analyze context and modify their actions accordingly

This level of autonomy fundamentally increases the blast radius of misconfigurations or compromised credentials. As a result, NHIs now require the same rigor in identity governance as human identities, if not more.

Provisioning NHIs in Agentic Systems

Provisioning a new agentic identity involves more than simply creating a service account. It requires establishing the identity’s purpose, scope, permissions model, and environmental constraints. Effective provisioning includes:

- Assigning least-privilege entitlements based on the agent’s operational domain

- Defining behavioral boundaries (e.g., systems it can access, transactions it can perform)

- Generating secure credentials, such as API keys, tokens, or certificates

- Configuring continuous monitoring for anomalies or policy violations

Provisioning must also account for the agent’s potential to evolve, interact with other systems, or create additional tasks that change its identity footprint.
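The provisioning steps above could be captured in a small record that ties an agent to its purpose, owner, boundaries, and entitlements. This is a minimal sketch: the `system:permission` entitlement format, the field names, and the `provision` helper are assumptions made for illustration.

```python
from __future__ import annotations

import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class AgentIdentitySpec:
    agent_id: str
    purpose: str
    owner: str                       # accountable human or team
    allowed_systems: frozenset[str]  # behavioral boundary
    entitlements: frozenset[str]     # least-privilege permissions, "system:permission"
    monitored: bool = True           # continuous monitoring on by default


def provision(agent_id, purpose, owner, allowed_systems, entitlements):
    """Build the spec and a random credential; reject out-of-boundary entitlements."""
    for entitlement in entitlements:
        system = entitlement.split(":", 1)[0]
        if system not in allowed_systems:
            raise ValueError(f"{entitlement!r} is outside the agent's boundary")
    spec = AgentIdentitySpec(
        agent_id, purpose, owner, frozenset(allowed_systems), frozenset(entitlements)
    )
    api_key = secrets.token_urlsafe(32)  # hand to a secrets manager, never log it
    return spec, api_key
```

Validating entitlements against the declared boundary at creation time means an over-broad request fails before any credential exists.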

Deprovisioning and Deactivation

Deprovisioning NHIs becomes more complex when agents are autonomous. A dormant or abandoned machine identity can persist quietly for months or years, which creates a high-risk entry point for attackers. Proper deprovisioning requires:

- Detecting unused or outdated agent identities

- Revoking all associated permissions and tokens

- Ensuring dependent systems or agents no longer reference the identity

- Documenting the deactivation for audit readiness

Without strict enforcement, NHIs can accumulate and lead to shadow access or privilege creep across the environment.
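A simple version of this deprovisioning checklist might look like the sketch below. The in-memory dictionaries stand in for whatever identity store and token service an organization actually uses, and the function names are hypothetical.

```python
from __future__ import annotations

from datetime import datetime, timedelta


def find_stale(last_used: dict[str, datetime], now: datetime, max_idle: timedelta) -> list[str]:
    """Return agent IDs whose last recorded activity is older than max_idle."""
    return sorted(agent for agent, seen in last_used.items() if now - seen > max_idle)


def deprovision(agent_id: str, tokens: dict, references: dict, audit_log: list, now: datetime) -> None:
    """Revoke an agent's tokens and record the event, unless something still depends on it."""
    dependents = sorted(src for src, target in references.items() if target == agent_id)
    if dependents:
        raise RuntimeError(f"{agent_id} is still referenced by {dependents}")
    revoked = tokens.pop(agent_id, [])  # drop every token issued to the agent
    audit_log.append({
        "event": "deprovisioned",
        "agent": agent_id,
        "revoked_tokens": len(revoked),
        "at": now.isoformat(),
    })
```

Refusing to deprovision while references remain is one possible design choice; another is to deprovision anyway and alert the owners of the dependent systems.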

Token and Credential Lifecycle Management

Agentic systems rely heavily on tokens, secrets, and certificates for authentication. Managing these credentials is critical to preventing unauthorized access. A secure token lifecycle includes:

- Automated rotation of API keys, OAuth tokens, and certificates

- Expiration and renewal policies tied to agent roles or behaviors

- Scoped, time-bound tokens to limit unnecessary standing privileges

- Revocation workflows triggered by anomalies, deprovisioning events, or risk scores

Agentic AI may also initiate token requests, meaning governance frameworks must incorporate automated approval or verification mechanisms to ensure access remains controlled.
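One way to picture this lifecycle is a function that decides, for each credential, whether to keep, rotate, or revoke it. The `MAX_RISK` threshold, the five-minute rotation window, and the `Credential` fields are assumptions for this sketch.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Credential:
    agent_id: str
    issued_at: datetime
    ttl: timedelta        # time-bound by construction
    revoked: bool = False


MAX_RISK = 0.7            # assumed risk score at which a credential is revoked


def next_action(cred: Credential, now: datetime, risk_score: float,
                rotate_before: timedelta = timedelta(minutes=5)) -> str:
    """Return 'revoke', 'expired', 'rotate', or 'keep' for one credential."""
    if cred.revoked or risk_score >= MAX_RISK:
        return "revoke"
    expires_at = cred.issued_at + cred.ttl
    if now >= expires_at:
        return "expired"
    if expires_at - now <= rotate_before:
        return "rotate"   # renew shortly before expiry to avoid an outage
    return "keep"
```

Passing `now` and `risk_score` in as arguments keeps the decision deterministic and easy to replay during an audit.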

Defining an Agentic User and Credential Flow

In traditional identity management, credentials are long-lived and tied to static users or applications. Agentic AI shifts this paradigm. Here, users may be human or machine agents, and their access is often context-dependent, dynamic, and short-lived. Understanding how an “agentic user” flows through the identity lifecycle is critical for IT and security leaders preparing governance models that can keep pace with AI-driven autonomy.

An agentic user refers to an autonomous digital entity – powered by AI – that can request, use, and even revoke its own access to systems and data. Unlike human users, these agents may operate at machine speed, spin up new processes without manual initiation, and dissolve once their purpose is complete. To govern them securely, identity frameworks need a credential flow that is both flexible and risk-aware, balancing autonomy with control.

Key Stages in the Credential Flow

The credential flow for agentic users is not a simple “grant and revoke” process. Rather, it is a continuous lifecycle that adapts to dynamic identities and autonomous actions.

Each stage plays a role in ensuring that access is secure, contextual, and temporary, while still maintaining auditability and compliance. By breaking down the process into clear steps, IT and security leaders can better understand how to design guardrails that support agility without sacrificing governance. Here are the key stages in the credential flow:

- Delegation and Authentication

- Just-in-Time Provisioning

- Policy Evaluation with PDP/PEP Models

- Logging, Observability, and Audit Generation

Delegation and Authentication

The process begins when an agent receives delegated authority from a human, another agent, or a governing system. Authentication must be lightweight yet strong, leveraging cryptographic assertions or identity provider (IdP) trust relationships, to establish the agent’s legitimacy. Unlike static passwords, credentials are ephemeral, bound to tasks, and subject to contextual validation.
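To make the idea of a task-bound cryptographic assertion concrete, the sketch below signs and verifies a delegation using an HMAC from the Python standard library. The shared secret, claim names, and assertion format are assumptions for illustration; a production system would more likely rely on asymmetric signatures or tokens issued by the IdP.

```python
from __future__ import annotations

import base64
import hashlib
import hmac
import json


def sign_delegation(key: bytes, delegator: str, agent: str, task: str, expires_at: int) -> str:
    """Return 'payload.signature', both base64url-encoded."""
    payload = json.dumps(
        {"delegator": delegator, "agent": agent, "task": task, "exp": expires_at},
        sort_keys=True,
    ).encode()
    signature = hmac.new(key, payload, hashlib.sha256).digest()
    return (base64.urlsafe_b64encode(payload).decode() + "."
            + base64.urlsafe_b64encode(signature).decode())


def verify_delegation(key: bytes, assertion: str, task: str, now: int) -> dict | None:
    """Return the claims if the signature, expiry, and task all check out, else None."""
    try:
        payload_b64, signature_b64 = assertion.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        signature = base64.urlsafe_b64decode(signature_b64)
    except ValueError:  # malformed structure or invalid base64
        return None
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        return None
    claims = json.loads(payload)
    if claims["exp"] <= now or claims["task"] != task:
        return None     # expired, or presented for a different task
    return claims
```

The assertion is only honored for the named task and only until `exp`, which mirrors the ephemeral, task-bound credentials described above.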

Just-in-Time Provisioning

Rather than pre-assigning broad entitlements, just-in-time (JIT) provisioning grants access only when needed, for the exact duration of a task.

For example, an AI agent analyzing sensitive customer data may be issued a temporary role with narrowly scoped permissions. Once the task concludes, access is revoked automatically, reducing the attack surface and preventing standing privileges.
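The grant-then-revoke pattern maps naturally onto a context manager, sketched below. The `active_grants` set is a stand-in for a real entitlement store, and the agent and permission names are hypothetical.

```python
from __future__ import annotations

from contextlib import contextmanager

# Stand-in for a real entitlement store (assumption for this sketch).
active_grants: set[tuple[str, str]] = set()


@contextmanager
def jit_access(agent_id: str, permission: str):
    """Grant a permission for the duration of a task, then always revoke it."""
    grant = (agent_id, permission)
    active_grants.add(grant)
    try:
        yield grant
    finally:
        active_grants.discard(grant)  # runs even if the task raises
```

A task would then run inside `with jit_access("agent-7", "crm:read"):`, and the permission disappears as soon as the block exits, whether the task succeeded or failed.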

Policy Evaluation with PDP/PEP Models

To ensure compliance and minimize risk, every credential request and action must pass through policy decision points (PDPs) and policy enforcement points (PEPs). These mechanisms evaluate contextual rules – such as time, location, sensitivity of data, and agent trust score – before allowing or denying access.

By embedding policy engines into the flow, organizations gain real-time governance that scales with autonomous workloads.
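The split between deciding and enforcing can be sketched as follows: `decide` plays the PDP role and returns a verdict, while the `enforce` decorator plays the PEP role and blocks the call if the verdict is negative. The sensitivity scale and trust thresholds are invented for this example.

```python
from dataclasses import dataclass
from functools import wraps


@dataclass(frozen=True)
class Request:
    agent_id: str
    action: str
    data_sensitivity: int  # 0 = public ... 3 = restricted (assumed scale)
    trust_score: float     # 0.0 to 1.0


# Assumed minimum trust score per sensitivity level.
REQUIRED_TRUST = {0: 0.0, 1: 0.3, 2: 0.6, 3: 0.9}


def decide(req: Request) -> bool:
    """PDP: a pure decision with no side effects."""
    return req.trust_score >= REQUIRED_TRUST[req.data_sensitivity]


def enforce(func):
    """PEP: consult the PDP on every call to the protected operation."""
    @wraps(func)
    def wrapper(req: Request, *args, **kwargs):
        if not decide(req):
            raise PermissionError(f"{req.agent_id} denied for {req.action}")
        return func(req, *args, **kwargs)
    return wrapper


@enforce
def read_records(req: Request) -> str:
    return f"records for {req.agent_id}"
```

Keeping the decision logic separate from the enforcement wrapper means policies can change without touching the protected operations.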

Logging, Observability, and Audit Generation

A cornerstone of secure agentic identity is transparency. Every credential request, approval, and usage event must generate detailed logs enriched with context (who/what requested access, under what policy, and for how long).

These logs feed into observability platforms, enabling anomaly detection, compliance reporting, and forensic investigations. Crucially, they provide the accountability layer that ensures autonomous agents remain governable.

Architecture of Agentic AI and Identity

Agentic AI introduces an identity model built around autonomous decision-making, contextual awareness, and continuous interaction with digital systems. To understand how these systems securely operate within enterprise environments, IT and security leaders need a clear view of the architectural components that power agentic behavior.

Four core layers – perception, planning, action, and memory – work together to allow an agent to sense its environment, determine the best course of action, execute tasks, and retain context across interactions. When mapped to enterprise identity systems, these layers shape how an agent authenticates, authorizes, and governs its own operations.

Perception Layer

The perception layer acts as the agent’s sensory input system, allowing it to observe and interpret signals from the environment. These inputs include identity attributes, authorization states, resource availability, security policies, behavioral patterns, and system events.

In identity governance, the perception layer enables the agent to:

- Detect changes in user or system context (location, device, network, behavior)

- Evaluate current access rights against policy baselines

- Monitor identity lifecycle events such as role changes or provisioning tasks

- Identify anomalies or potential access risks

This layer forms the foundation of autonomous access decision-making by ensuring the agent has accurate, real-time awareness of its environment.

Planning Engine

The planning engine is the agent’s reasoning center, responsible for evaluating inputs, predicting outcomes, and deciding which actions to take. In identity contexts, the planning engine interprets policies, risk scores, workflows, and compliance rules to determine the optimal next step.

Capabilities include:

- Assessing whether a user or system should receive additional access

- Determining when to escalate or request just-in-time privileges

- Prioritizing remediation tasks such as deprovisioning or certification reminders

- Building multi-step strategies for complex identity workflows

The planning engine ensures that the agent acts not only automatically but intelligently, making choices aligned with governance objectives.

Action Engine

Once a plan is formed, the action engine executes it. This layer interfaces directly with identity systems, APIs, infrastructure tools, and governance platforms to carry out tasks with precision and speed.

Typical identity-related actions include:

- Provisioning or deprovisioning user or machine accounts

- Adjusting access rights or triggering conditional access workflows

- Initiating security controls such as MFA prompts or privilege revocation

- Creating or updating audit records and compliance documentation

The action engine must operate within strict controls and guardrails to ensure changes remain compliant, traceable, and reversible.

Memory and Context Management

The memory layer allows the agent to retain and reference knowledge across time, which is essential for learning, adaptation, and continuity. In an identity governance framework, memory may store:

- Historical access decisions and their outcomes

- User behavior patterns or identity baselines

- Policy interpretations and common workflow paths

- Role or entitlement mappings learned through pattern recognition

Context management helps the agent understand not only what is happening but why, enabling it to adjust behavior, improve decision accuracy, and avoid repeating errors. Memory also supports auditing by preserving decision rationale and event history.

Bringing the Layers Together

When these architectural layers operate in unison, agentic AI becomes capable of delivering autonomous identity governance: continuously evaluating context, formulating decisions, taking controlled actions, and learning from outcomes. This architecture marks the shift from static, manual access processes to dynamic, self-regulating identity ecosystems where agents can manage permissions, respond to threats, and maintain least-privilege at scale.
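A toy version of the four layers working together is sketched below. The signal names, the 0.8 risk threshold, and the single `revoke_access` action are assumptions; a real agent would have far richer inputs and plans.

```python
class IdentityAgent:
    """Minimal perceive -> plan -> act loop with a memory of past decisions."""

    def __init__(self):
        self.memory = []  # decision history, also usable as an audit trail

    def perceive(self, signals: dict) -> dict:
        # Perception layer: normalize raw signals into an observation.
        return {
            "risk": float(signals.get("risk", 0.0)),
            "has_access": bool(signals.get("has_access", False)),
        }

    def plan(self, observation: dict) -> str:
        # Planning engine: choose an action from the observation.
        if observation["has_access"] and observation["risk"] >= 0.8:
            return "revoke_access"
        return "no_op"

    def act(self, action: str, entitlements: set, user: str) -> None:
        # Action engine: apply the chosen change to the entitlement store.
        if action == "revoke_access":
            entitlements.discard(user)

    def step(self, signals: dict, entitlements: set, user: str) -> str:
        observation = self.perceive(signals)
        action = self.plan(observation)
        self.act(action, entitlements, user)
        self.memory.append({"observation": observation, "action": action})
        return action
```

Each call to `step` leaves a record of what the agent saw and what it did, which is the raw material for the decision rationale mentioned under memory and context management.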

Building a Modern Identity Architecture for AI Agents

As AI agents increasingly operate with autonomy, organizations need to rethink how identity governance for AI is structured. Traditional, static frameworks fail to capture the dynamic, ephemeral nature of these entities.

A modern identity architecture must therefore balance speed and flexibility with security and compliance, ensuring that AI-driven activity is properly authenticated, authorized, and auditable.

Two pillars stand out: context-aware least-privilege access and comprehensive lifecycle management.

Context-Aware, Least-Privilege Access

At the heart of a modern architecture is context-aware access control. Unlike human users, AI agents may spin up hundreds of sessions per day across cloud services, databases, and SaaS platforms. Granting broad, persistent entitlements exposes organizations to risk, especially when agents operate continuously.

To mitigate this, identity systems should issue short-lived credentials: ephemeral tokens that expire quickly, forcing revalidation at frequent intervals. This approach ensures that if a credential is compromised, the blast radius is minimized.

Equally important is granularity in policy enforcement. Rather than a binary allow/deny model, modern architectures rely on fine-grained access rules. These policies evaluate contextual signals like the agent’s task, data sensitivity, time of request, and environment (e.g., cloud region, network security posture). By binding access to specific conditions, organizations reduce the likelihood of privilege misuse while still enabling agents to perform their functions effectively.

In practice, this may look like allowing an AI agent access to a database only to read anonymized data during business hours, while restricting write or export capabilities unless additional approvals are triggered. This model reflects zero-trust principles while maintaining operational agility.
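The database example above could be written as a small rule that returns one of three outcomes rather than a binary allow/deny. Business hours are assumed here to be Monday to Friday, 09:00 to 17:00, and the function and parameter names are hypothetical.

```python
from datetime import datetime


def evaluate(action: str, dataset_is_anonymized: bool, when: datetime,
             approved: bool = False) -> str:
    """Return 'allow', 'deny', or 'require_approval' for a database request."""
    in_business_hours = when.weekday() < 5 and 9 <= when.hour < 17
    if action == "read":
        return "allow" if dataset_is_anonymized and in_business_hours else "deny"
    if action in {"write", "export"}:
        # Higher-risk actions need an explicit approval step.
        return "allow" if approved else "require_approval"
    return "deny"  # default-deny for anything not listed
```

The default-deny branch at the end is what keeps the rule aligned with zero-trust principles: any action the policy does not name is refused.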

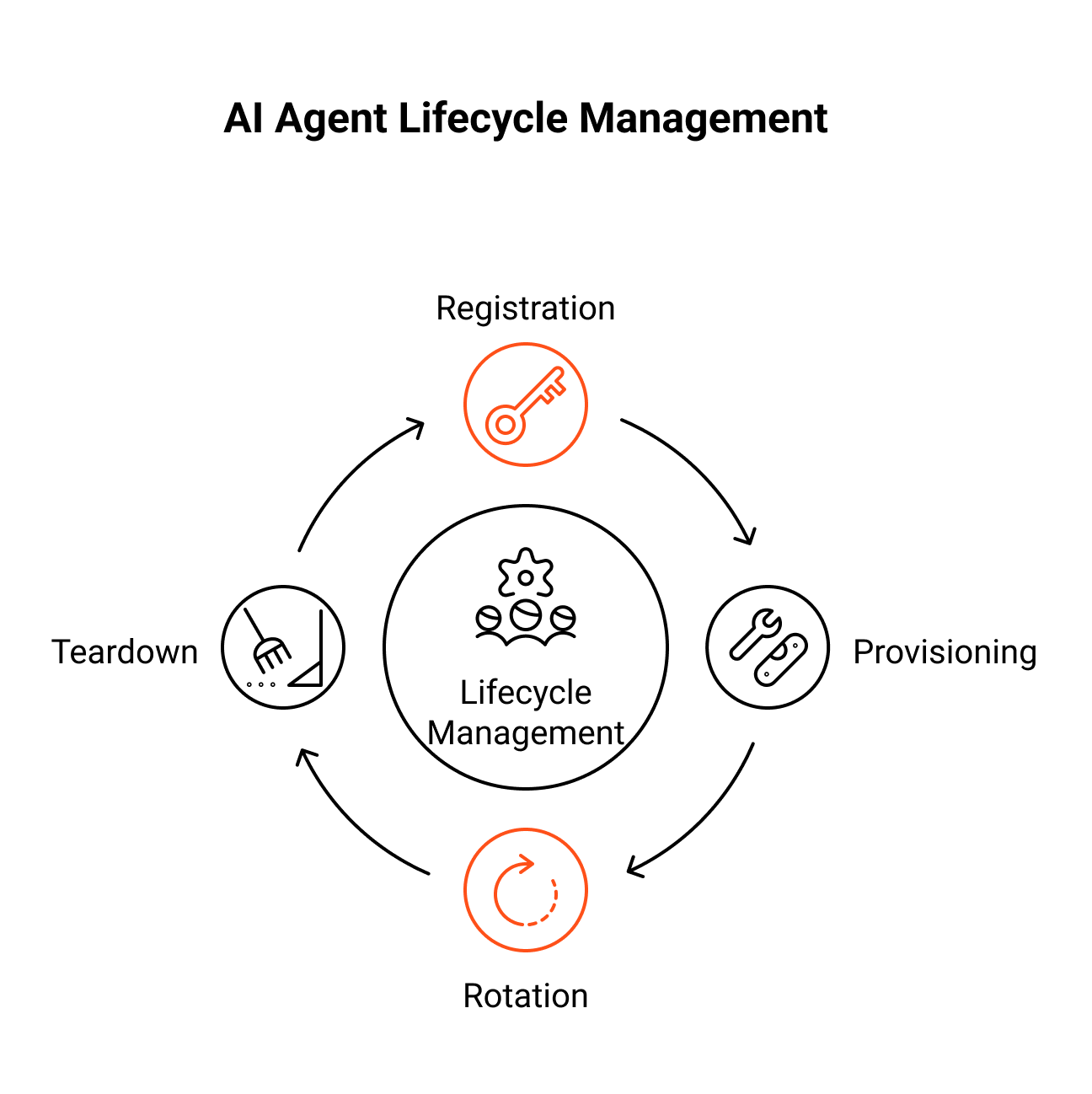

Lifecycle Management

Another cornerstone is lifecycle management for agent identities. Just as human users follow a joiner-mover-leaver (JML) model, AI agents require their own tailored process. The lifecycle includes four stages:

- Registration – Defining the agent, assigning its unique identity, and mapping it to its purpose within the organization. This step establishes accountability and provides the foundation for traceability.

- Provisioning – Assigning entitlements and policies aligned to the agent’s role. Unlike static provisioning, these rights should adapt to the agent’s context and may be granted just-in-time to minimize exposure.

- Rotation – Periodic renewal of keys, certificates, and credentials to prevent long-lived secrets from becoming an attack vector. Automated rotation should be enforced to keep pace with the scale and speed of agent activity.

- Teardown – Decommissioning the agent’s identity when it is no longer needed, including revoking credentials, wiping entitlements, and archiving activity logs for audit purposes.

Lifecycle management ensures that agent identities remain governed, transparent, and auditable throughout their existence. Without these controls, organizations risk accumulating “ghost agents” that retain unmonitored access long after their purpose has expired.
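The four stages can be modeled as a small state machine so that invalid jumps, such as reviving a torn-down agent, are rejected. The transition table below is an assumption for this sketch; an organization may well allow different paths.

```python
from enum import Enum


class Stage(Enum):
    REGISTERED = "registered"
    PROVISIONED = "provisioned"
    ROTATED = "rotated"
    TORN_DOWN = "torn_down"


# Assumed transitions: rotation can repeat, teardown is final.
ALLOWED = {
    Stage.REGISTERED: {Stage.PROVISIONED, Stage.TORN_DOWN},
    Stage.PROVISIONED: {Stage.ROTATED, Stage.TORN_DOWN},
    Stage.ROTATED: {Stage.ROTATED, Stage.TORN_DOWN},
    Stage.TORN_DOWN: set(),
}


def advance(current: Stage, target: Stage) -> Stage:
    """Move to the target stage, or raise if the lifecycle forbids it."""
    if target not in ALLOWED[current]:
        raise ValueError(f"cannot go from {current.value} to {target.value}")
    return target
```

Making teardown a terminal state is one way to guard against "ghost agents": a decommissioned identity has to be registered again rather than quietly reactivated.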

Protocols and Standards for AI Agent Identity

As AI agents become more autonomous and prevalent across IT ecosystems, the need for standardized identity protocols has never been greater. Traditional methods designed for human-centric workflows are being stretched to their limits. Modern standards must adapt to dynamic agent lifecycles, continuous authentication, and fine-grained authorization to ensure secure and compliant operations.

Evolving Standards

Protocols like OAuth 2.1 and OpenID Connect (OIDC) remain the foundation of modern identity management, but their application is evolving for AI agents. OAuth 2.1 builds on earlier iterations by simplifying flows, strengthening token handling, and addressing security gaps. For AI agents, this means more robust protections when requesting and using access tokens in highly distributed environments.

OpenID Connect adds an authentication layer on top of OAuth 2.0, the framework that OAuth 2.1 consolidates, enabling AI agents to assert identity in a standardized way. This is particularly important when agents must act on behalf of users or organizations across multiple platforms. OIDC’s support for claims-based identity enables the encoding of attributes like role, purpose, or contextual conditions within tokens.

Together, OAuth 2.1 and OIDC provide a strong baseline, but extending them for AI-driven use cases will require tighter integration with policy engines and real-time context evaluation to handle ephemeral, machine-to-machine interactions.

Federation and Token Scoping

One of the biggest challenges for AI agent identity is the ability to federate identities across systems while maintaining strict boundaries. Agents often operate across cloud providers, SaaS platforms, and internal IT systems, requiring secure and scalable federation mechanisms.

Secure token exchange is a central feature here. Through token exchange flows, an AI agent can trade one token for another, scoped specifically to the target service and task. This prevents over-provisioning and ensures that tokens are limited in scope, duration, and privileges. For example, an AI agent analyzing data in a SaaS application might receive a token that permits read-only access to a single dataset for 15 minutes, rather than a broad, persistent credential.

Federation also relies on identity assertions: standardized statements that convey the legitimacy of an agent and its current context. These assertions enable trust between federated systems, ensuring that the requesting agent has been authenticated and authorized according to the originating domain’s policies.

When combined, token scoping and federation offer a pathway to least-privilege, context-aware access that can scale across hybrid and multi-cloud ecosystems.
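For reference, the form body of a token exchange request can be sketched as below. The parameter names and URN values follow the OAuth 2.0 Token Exchange specification (RFC 8693); the token value, audience, and scope are hypothetical. The sketch only builds the body and does not send it, and the lifetime of the returned token is set by the authorization server rather than by the request.

```python
from urllib.parse import urlencode


def build_token_exchange_body(subject_token: str, audience: str, scope: str) -> str:
    """Build the form-encoded body for an RFC 8693 token exchange request."""
    return urlencode({
        "grant_type": "urn:ietf:params:oauth:grant-type:token-exchange",
        "subject_token": subject_token,  # the token the agent already holds
        "subject_token_type": "urn:ietf:params:oauth:token-type:access_token",
        "requested_token_type": "urn:ietf:params:oauth:token-type:access_token",
        "audience": audience,            # the target service
        "scope": scope,                  # narrowed to the task at hand
    })
```

An agent would POST this body to the authorization server's token endpoint with a content type of `application/x-www-form-urlencoded`, then use the narrower token it receives for the single downstream call.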

Identity Risks and Threats of Agentic AI

As agentic AI becomes increasingly embedded in identity ecosystems, it introduces a new class of risks that extend beyond traditional user or machine identity threats. These systems act with autonomy, make decisions without human intervention, and coordinate across distributed environments.

While this autonomy offers efficiency and scale, it also expands the potential attack surface, especially if guardrails, oversight, and access boundaries are not rigorously enforced. The sections below examine the key identity risks introduced by agentic AI and why they matter for modern IT and security teams:

- Overprivilege and Excessive Autonomy

- Credential Misuse and Token Exposure

- Asynchronous Workflows and Race-Condition Exploits

- Lateral Movement Through Interconnected Agents

- Chains of Autonomous Agents and Emergent Behaviors

Overprivilege and Excessive Autonomy

One of the most significant risks stems from granting agentic systems more privileges than required. Because agentic AI often performs multi-step workflows, organizations may assign broad, persistent permissions to ensure it can function without interruption.

This creates several risk scenarios:

- Privilege escalation: A compromised agent could manipulate its own access or request unauthorized privileges.

- Unbounded authority: Excess permissions allow an agent to make identity decisions outside intended scopes, such as modifying SoD-sensitive entitlements or altering policy logic.

- Shadow governance: Autonomous agents with poorly monitored rights may silently introduce access changes with no human oversight.

Overprivilege amplifies the potential blast radius of any malfunction or compromise and must be controlled through least-privilege enforcement, JIT permissions, and scope-limited roles.

Credential Misuse and Token Exposure

Agentic AI systems typically rely on API keys, tokens, service credentials, or delegated authorizations to perform tasks. These credentials are attractive targets for attackers because they often:

- Have broad access across identity systems or apps

- Are long-lived or unrotated

- Are embedded in pipelines, scripts, or agent runtimes

- Operate without human behavioral baselines, making misuse harder to detect

If an attacker steals an agent’s credentials, they inherit the agent’s full authority—potentially allowing them to automate malicious actions at scale.

Asynchronous Workflows and Race-Condition Exploits

Agentic systems frequently handle asynchronous, multi-threaded identity workflows. While efficient, this opens the door to:

- Race-condition vulnerabilities, where attackers exploit timing gaps between steps (e.g., deleting logs before revocation completes).

- Inconsistent state management, causing mismatched entitlements across systems.

- Execution drift, where different workflow branches execute simultaneously without proper reconciliation.

These timing and sequencing risks can cause policy violations, orphaned access, or unexpected privilege propagation.
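One common mitigation for these timing gaps is optimistic concurrency control: a workflow records the version it read and its write is rejected if another workflow has changed the state in the meantime. The `EntitlementStore` class below is a hypothetical in-memory sketch of that idea.

```python
import threading


class EntitlementStore:
    """Versioned entitlement set; writes succeed only against the latest version."""

    def __init__(self):
        self._lock = threading.Lock()
        self._version = 0
        self._entitlements = set()

    def snapshot(self):
        """Return (version, entitlements) as a consistent pair."""
        with self._lock:
            return self._version, frozenset(self._entitlements)

    def apply(self, expected_version: int, add=(), remove=()) -> bool:
        """Apply a change only if nothing was written since the caller's snapshot."""
        with self._lock:
            if expected_version != self._version:
                return False  # stale read: caller must re-read and re-plan
            self._entitlements |= set(add)
            self._entitlements -= set(remove)
            self._version += 1
            return True
```

When `apply` returns `False`, the workflow re-reads the current state and decides again, instead of overwriting a change it never saw.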

Lateral Movement Through Interconnected Agents

Agentic AI rarely operates in isolation. Multi-agent ecosystems—each agent handling provisioning, policy enforcement, anomaly detection, or access reviews—create complex chains of interdependent authority.

This raises new identity threats:

- Agent-to-agent compromise: If one agent in the chain is compromised, attackers may move laterally to others through shared APIs or cross-agent permissions.

- Cascading failures: A misconfigured agent might trigger unintended actions across others, amplifying its impact.

- Distributed access sprawl: Multiple agents may inadvertently grant overlapping or redundant access if coordination mechanisms are weak.

As agent ecosystems scale, a single point of weakness can ripple across the identity fabric.
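A quick way to estimate how far a compromise could ripple is to treat cross-agent permissions as a directed graph and compute what is reachable from the compromised agent. The agent names and the `trust_edges` structure below are hypothetical.

```python
from __future__ import annotations

from collections import deque


def blast_radius(trust_edges: dict[str, set[str]], compromised: str) -> set[str]:
    """Return every agent reachable from the compromised one via trust edges."""
    seen = {compromised}
    queue = deque([compromised])
    while queue:  # breadth-first search over the trust graph
        current = queue.popleft()
        for neighbor in trust_edges.get(current, ()):
            if neighbor not in seen:
                seen.add(neighbor)
                queue.append(neighbor)
    seen.discard(compromised)  # report only the downstream agents
    return seen
```

Running this for each agent highlights which ones sit at the head of long trust chains and therefore deserve the tightest controls.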

Chains of Autonomous Agents and Emergent Behaviors

Multi-agent systems can exhibit emergent behaviors—actions that arise from agent interactions but were not explicitly programmed. In identity contexts, this may include:

- Unexpected policy interpretations due to conflicting signals

- Self-reinforcing access decisions (e.g., one agent approves access another predicted as necessary)

- Runaway automation loops, especially when agents trigger each other’s workflows

Without monitoring and guardrails, emergent agent behavior can unintentionally override human governance or introduce new attack vectors.
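A simple guardrail against runaway loops is a hop budget: each chain of agent-to-agent triggers is allowed a fixed number of handoffs before it is halted for human review. The `triggers` mapping, the `MAX_HOPS` value, and the status strings are assumptions for this sketch.

```python
MAX_HOPS = 3  # assumed budget of agent-to-agent handoffs per chain


def dispatch(triggers: dict, start: str, max_hops: int = MAX_HOPS):
    """Follow agent-to-agent triggers until the chain ends or the budget runs out.

    `triggers` maps an agent to the agent it triggers next; an agent that is
    absent from the mapping triggers nothing further.
    """
    trail = [start]
    current = start
    while True:
        next_agent = triggers.get(current)
        if next_agent is None:
            return trail, "completed"
        if len(trail) > max_hops:  # the trail already holds max_hops handoffs
            return trail, "halted_for_review"
        trail.append(next_agent)
        current = next_agent
```

The returned trail shows exactly which agents participated, so a reviewer can see where the loop formed.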

Security, Audit, and Governance in Agentic AI

Identity governance strategies must expand beyond human users to address the unique risks posed by autonomous systems. Securing agentic AI requires not only strong authentication and authorization, but also continuous oversight, lifecycle management, and auditable transparency. Below are the critical pillars IT and security leaders should prioritize.

- Secure Provisioning and Credential Management

- Continuous Logging and Validation

- Audit and Accountability

Secure Provisioning and Credential Management

Managing keys, tokens, and credentials for AI agents requires a fully automated lifecycle approach. Unlike human identities, agent identities may spin up and down in seconds, making manual processes both impractical and insecure.

Automated provisioning ensures agents receive short-lived, context-aware credentials at the moment of need. Equally important, automated rotation and teardown eliminate stale tokens and reduce the attack surface. By enforcing JIT access, organizations can ensure agents only operate within narrowly defined scopes, significantly minimizing privilege escalation risks.

Continuous Logging and Validation

Static, point-in-time security checks are insufficient for autonomous agents. Instead, organizations must implement continuous logging and validation mechanisms that provide real-time visibility into agent behavior. By capturing every action – such as API calls, data retrievals, and system interactions – security teams can build baselines of expected activity and quickly flag anomalies.

Machine learning models can further enhance monitoring by detecting unusual access patterns or privilege misuse. Continuous validation also ensures that trust decisions remain dynamic, adapting to changing contexts such as environment, time, or workload sensitivity.
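As a rough illustration of baselining, the Python sketch below flags an agent's hourly action count when it deviates sharply from that agent's own history. It uses a plain z-score as a deliberately simple stand-in for the machine learning models mentioned above. The class name `ActivityBaseline`, the hourly granularity, and the threshold of 3.0 are assumptions chosen for illustration.

```python
import statistics
from collections import defaultdict


class ActivityBaseline:
    """Per-agent, per-action baseline of hourly activity counts."""

    def __init__(self, z_threshold: float = 3.0):
        self._z = z_threshold
        # (agent_id, action) -> list of observed hourly counts
        self._history = defaultdict(list)

    def record(self, agent_id, action, hourly_count):
        self._history[(agent_id, action)].append(hourly_count)

    def is_anomalous(self, agent_id, action, hourly_count):
        history = self._history.get((agent_id, action))
        if not history or len(history) < 2:
            # No usable baseline yet: flag for review rather than trust.
            return True
        mean = statistics.fmean(history)
        stdev = statistics.stdev(history)
        if stdev == 0:
            # Perfectly constant history: any change is a deviation.
            return hourly_count != mean
        return abs(hourly_count - mean) / stdev > self._z
```

Treating an action with no history as anomalous is a conservative choice; a production system would likely combine this with allowlists and context such as environment or workload sensitivity.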

Audit and Accountability

For both regulatory and internal risk management purposes, agent activity must be transparent and forensically sound. Comprehensive audit trails enable organizations to attribute actions to specific agents, providing accountability in environments where machine-driven decisions could otherwise become opaque. Immutable logs, enriched with contextual metadata (identity assertions, token scopes, time-stamped events), provide the forensic integrity required during audits, investigations, or compliance reviews.

Beyond reactive use, these records are essential for proactive governance, allowing leaders to assess whether access policies and delegation frameworks are functioning as intended.
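One common way to approximate immutable logs is a hash chain, in which each record's hash covers the previous record's hash so that any later edit becomes detectable. The Python sketch below assumes a hypothetical `AuditLog` class and field names (`agent_id`, `action`, `scopes`, `timestamp`). It makes the log tamper-evident rather than tamper-proof, so real deployments would typically pair it with write-once storage.

```python
import hashlib
import json


def _digest(entry: dict, prev_hash: str) -> str:
    """Hash the entry together with the previous record's hash."""
    payload = json.dumps(entry, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    GENESIS = "0" * 64  # placeholder hash that precedes the first record

    def __init__(self):
        self._records = []

    def append(self, agent_id, action, scopes, timestamp):
        entry = {
            "agent_id": agent_id,
            "action": action,
            "scopes": sorted(scopes),
            "timestamp": timestamp,
        }
        prev = self._records[-1]["hash"] if self._records else self.GENESIS
        self._records.append(
            {"entry": entry, "prev": prev, "hash": _digest(entry, prev)}
        )

    def verify(self) -> bool:
        """Recompute every hash; False means a record was altered."""
        prev = self.GENESIS
        for rec in self._records:
            if rec["prev"] != prev or rec["hash"] != _digest(rec["entry"], prev):
                return False
            prev = rec["hash"]
        return True
```

Running `verify` during an audit or on a schedule gives reviewers a quick check that the trail they are reading is the trail that was written.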

Use Cases and Applications of Agentic AI in Identity

Agentic AI is transforming enterprise operations by enabling systems that can understand context, make decisions, and take autonomous action across complex digital environments. In identity and security workflows, these agents streamline processes, reduce human overhead, and improve precision, especially in environments where traditional rule-based automation struggles to scale.

Below are practical, high-impact use cases demonstrating how agentic AI is reshaping security operations, IT management, and organizational productivity.

Security Operations

Security teams are among the earliest adopters of agentic AI due to the need for continuous monitoring, rapid incident response, and real-time decision-making. Agentic AI enhances SOC capabilities by acting as an intelligent, always-on analyst capable of interpreting signals at machine speed.

Key applications include:

- Automated Threat Investigation: An agent can analyze alerts, correlate logs, check identity context, and determine whether an event is benign or risky.

- Adaptive Access Responses: When AI detects suspicious behavior (e.g., impossible travel or anomalous privilege use), it can autonomously trigger step-up authentication, session isolation, or temporary access suspension.

- Incident Containment: Agents can revoke entitlements, rotate credentials, quarantine compromised accounts, and notify human analysts, dramatically reducing mean time to respond (MTTR).

- Continuous Identity Risk Scoring: By tracking user behavior, agentic AI assigns real-time risk scores that inform access decisions and governance workflows.

These capabilities shift SOC teams from reactive triage to proactive and preventive security.
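The link between risk scoring and adaptive access responses can be sketched as a mapping from observed signals to a score, and from the score to a response tier. In the Python example below, the signal names echo the examples above, but the weights, the 0 to 100 scale, and the thresholds are hypothetical values chosen only for illustration.

```python
from enum import Enum


class Response(Enum):
    ALLOW = "allow"
    STEP_UP_AUTH = "step_up_auth"
    ISOLATE_SESSION = "isolate_session"
    SUSPEND_ACCESS = "suspend_access"


# Hypothetical weights on a 0-100 scale; real values would be tuned.
SIGNAL_WEIGHTS = {
    "impossible_travel": 50,
    "anomalous_privilege_use": 40,
    "new_device": 20,
}


def risk_score(signals) -> int:
    """Sum the weights of distinct signals, capped at 100."""
    total = sum(SIGNAL_WEIGHTS.get(s, 0) for s in set(signals))
    return min(100, total)


def choose_response(score: int) -> Response:
    """Map a score to the least disruptive response that covers it."""
    if score >= 80:
        return Response.SUSPEND_ACCESS
    if score >= 50:
        return Response.ISOLATE_SESSION
    if score >= 20:
        return Response.STEP_UP_AUTH
    return Response.ALLOW
```

For example, `choose_response(risk_score(["impossible_travel"]))` yields `Response.ISOLATE_SESSION` under these weights, while combining it with `"anomalous_privilege_use"` crosses the suspension threshold.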

IT Automation and Operational Efficiency

IT operations involve thousands of repetitive, rule-driven identity and access tasks that are ideal candidates for agentic automation. By delegating operational workflows to autonomous agents, organizations reduce ticket queues and free IT staff to focus on strategic initiatives.

Common examples include:

- Automated Joiner/Mover/Leaver Workflows: Agents provision, modify, and remove user access based on HRIS triggers, role changes, and dynamic policies.

- Routine System Maintenance: Tasks like password rotations, stale account cleanup, and software provisioning can run autonomously with minimal oversight.

- Configuration Drift and Access Drift Correction: Agents detect deviations from policy baselines and automatically remediate inconsistencies.

- Policy Enforcement: When access violations or separation-of-duties (SoD) risks appear, AI can adjust permissions or recommend safer alternatives.

By autonomously orchestrating end-to-end identity workflows, agentic systems significantly reduce operational burden.
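Joiner/mover/leaver handling and access drift correction both reduce to the same comparison: the access a role should have versus the access an identity currently holds. The Python sketch below assumes a hypothetical `ROLE_BASELINES` table and event names. In practice the baseline would come from policy and the event from an HRIS trigger.

```python
# Hypothetical role-to-entitlement baselines for illustration only.
ROLE_BASELINES = {
    "engineer": {"github", "jira"},
    "analyst": {"jira", "bi_dashboard"},
}

VALID_EVENTS = {"joiner", "mover", "leaver", "drift_check"}


def plan_access_changes(event_type, role, current_access):
    """Return the grants and revocations needed to match the baseline."""
    if event_type not in VALID_EVENTS:
        raise ValueError(f"unknown event type: {event_type}")
    current = set(current_access)
    if event_type == "leaver":
        target = set()  # leavers keep nothing
    else:
        target = ROLE_BASELINES.get(role, set())
    return {
        "grant": sorted(target - current),
        "revoke": sorted(current - target),
    }
```

Returning a plan rather than applying changes directly leaves room for an approval step or a dry run before the agent acts.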

Customer Support and Workforce Assistance

Agentic AI is also redefining how employees and customers interact with service systems, moving from scripted chatbots to adaptive, context-aware support agents.

Enterprise use cases include:

- Self-Service Access Requests: Instead of ticketing queues, users interact with AI assistants (in Slack, Teams, or web portals) that understand intent and initiate the appropriate access workflows.

- IT Troubleshooting Agents: AI diagnoses common issues (VPN access, MFA resets, locked accounts) and resolves them autonomously.

- HR and Onboarding Assistants: Agents guide new hires through setup tasks, provision accounts, answer questions, and ensure compliance.

- Customer Authentication and Support: AI-driven identity verification, passwordless login orchestration, and adaptive fraud detection improve both security and user experience.

These agents reduce dependency on human support teams and elevate the quality of service.

Enterprise Productivity and Cross-System Coordination

Agentic AI can act as a coordinator across dozens of apps, services, and identity providers, something traditional automation cannot achieve without brittle integrations.

Examples include:

- Multi-System Governance Automation: Agents reconcile differences between IdPs, HR systems, SaaS apps, and directories to ensure consistency.

- Workflow Orchestration: Agents chain complex tasks across multiple platforms, such as setting up a new environment for a developer or creating project-specific resource groups.

- Predictive Access and Resource Recommendation: Based on usage patterns, AI suggests the access users may need next, keeps those suggestions within least-privilege bounds, and can request approval autonomously.

These capabilities make agentic AI a force multiplier for distributed teams and dynamic IT environments.
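As a small example of multi-system reconciliation, the Python sketch below compares accounts found in each downstream system against the set of active identities from an HR source and reports accounts with no matching identity. The function name and the shape of the inputs are assumptions. Real connectors would supply this data and would need to handle identifier mismatches between systems.

```python
def find_orphaned_accounts(hr_active, system_accounts):
    """Return, per system, the accounts with no active HR identity.

    hr_active: iterable of active identity IDs from the HR source.
    system_accounts: dict mapping system name -> iterable of account IDs.
    """
    active = set(hr_active)
    orphans = {}
    for system, accounts in system_accounts.items():
        extra = set(accounts) - active
        if extra:
            orphans[system] = sorted(extra)
    return orphans
```

An agent could run this check on a schedule and route each orphaned account into a review or deprovisioning workflow.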

Preparing for Identity in the Era of Agentic AI

Agentic AI is changing the identity landscape. Unlike traditional users, autonomous agents operate with unpredictable behaviors, dynamic permissions, and access needs that shift in real time. Legacy identity systems can’t keep up with the velocity or complexity of today’s AI-driven environments. To secure this new frontier, organizations must adopt identity frameworks that deliver continuous validation, automated policy enforcement, and full visibility across both human and non-human identities.

Lumos is built for this moment. As the Autonomous Identity Platform, Lumos provides the critical capabilities needed to govern agentic systems at scale: automated JML workflows, least-privilege enforcement, AI-driven access insights, and deep auditability. Whether onboarding a new hire or provisioning an AI agent, Lumos unifies lifecycle management and access control into a single platform that scales across 300+ cloud, SaaS, on-prem, and hybrid environments.

Lumos doesn’t just support agent governance – it makes it safe, scalable, and intelligent. With Albus, our AI identity agent, you can surface access risks, flag misaligned permissions, and continuously refine policies based on real-world usage. For enterprises looking to balance innovation with control, Lumos provides the operational guardrails needed to deploy agentic AI responsibly.

Ready to future-proof your identity strategy for the age of AI? Book a demo with Lumos and see how we help you secure every identity – human or machine – with automation, context, and confidence.
